Voice Capabilities Integration

Cloud Development currently provides two voice capabilities, Automatic Speech Recognition (ASR) and Text-to-Speech (TTS). Both are built on Tencent Cloud's speech APIs.

Introduction to Speech Recognition

Speech recognition converts speech to text. It currently supports the one-sentence recognition scenario, which recognizes short audio files of up to 60 seconds (API reference).

Limitations:

- The audio duration must not exceed 60 seconds, and the audio file size must not exceed 3MB.

- Supported recognition scenario types: Chinese General / Chinese-English-Cantonese / Chinese Medical / English / Cantonese
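The duration and size limits can be checked on the client before any recognition request is sent. The following is a minimal sketch only: `checkAudioLimits` is a hypothetical helper, not part of any SDK, and it assumes that "3MB" means 3 × 1024 × 1024 bytes.

```javascript
// Limits taken from the list above.
const MAX_DURATION_SECONDS = 60;
const MAX_SIZE_BYTES = 3 * 1024 * 1024; // assumption: "3MB" is read as 3 MiB

// Hypothetical helper: returns a list of problems, or an empty list
// if the audio is within both limits.
function checkAudioLimits({ durationSeconds, sizeBytes }) {
  const problems = [];
  if (durationSeconds > MAX_DURATION_SECONDS) {
    problems.push(`duration ${durationSeconds}s exceeds ${MAX_DURATION_SECONDS}s`);
  }
  if (sizeBytes > MAX_SIZE_BYTES) {
    problems.push(`size ${sizeBytes} bytes exceeds ${MAX_SIZE_BYTES} bytes`);
  }
  return problems;
}

// A 42-second, 1.5 MB clip passes; a 75-second, 4 MB clip fails both checks.
console.log(checkAudioLimits({ durationSeconds: 42, sizeBytes: 1500000 }));
console.log(checkAudioLimits({ durationSeconds: 75, sizeBytes: 4000000 }));
```

Returning a list of problems rather than a boolean lets the caller show every violated limit at once.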

Introduction to Speech Synthesis

Speech synthesis converts text to speech. It currently supports the long-text synthesis scenario, which suits reading and broadcasting and accepts text of flexible length (API reference).

Limitations:

- Supports speech synthesis for text of up to 100,000 characters. The audio result is returned asynchronously.

- Voice types: General Male Voice / General Female Voice / Advisory Male Voice / Advisory Female Voice / General Male Voice (Large Model) / General Female Voice (Large Model) / Chat Male Voice / Chat Female Voice / Reading Male Voice / Reading Female Voice
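The character limit can likewise be checked before a synthesis task is started. This is a rough sketch: `countCharacters` and `fitsTextToSpeechLimit` are hypothetical helpers, and counting Unicode code points is an assumption, since the service may count characters differently.

```javascript
// Limit taken from the list above.
const MAX_TTS_CHARACTERS = 100000;

// Counts Unicode code points, so a character outside the Basic
// Multilingual Plane (for example an emoji) counts once.
// Assumption: the service's own counting rule may differ.
function countCharacters(text) {
  return Array.from(text).length;
}

// Hypothetical helper: true if the text is within the documented limit.
function fitsTextToSpeechLimit(text) {
  return countCharacters(text) <= MAX_TTS_CHARACTERS;
}

console.log(fitsTextToSpeechLimit("Hello, I am an AI assistant")); // true
console.log(fitsTextToSpeechLimit("a".repeat(100001))); // false
```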

How to Use

1. Activate

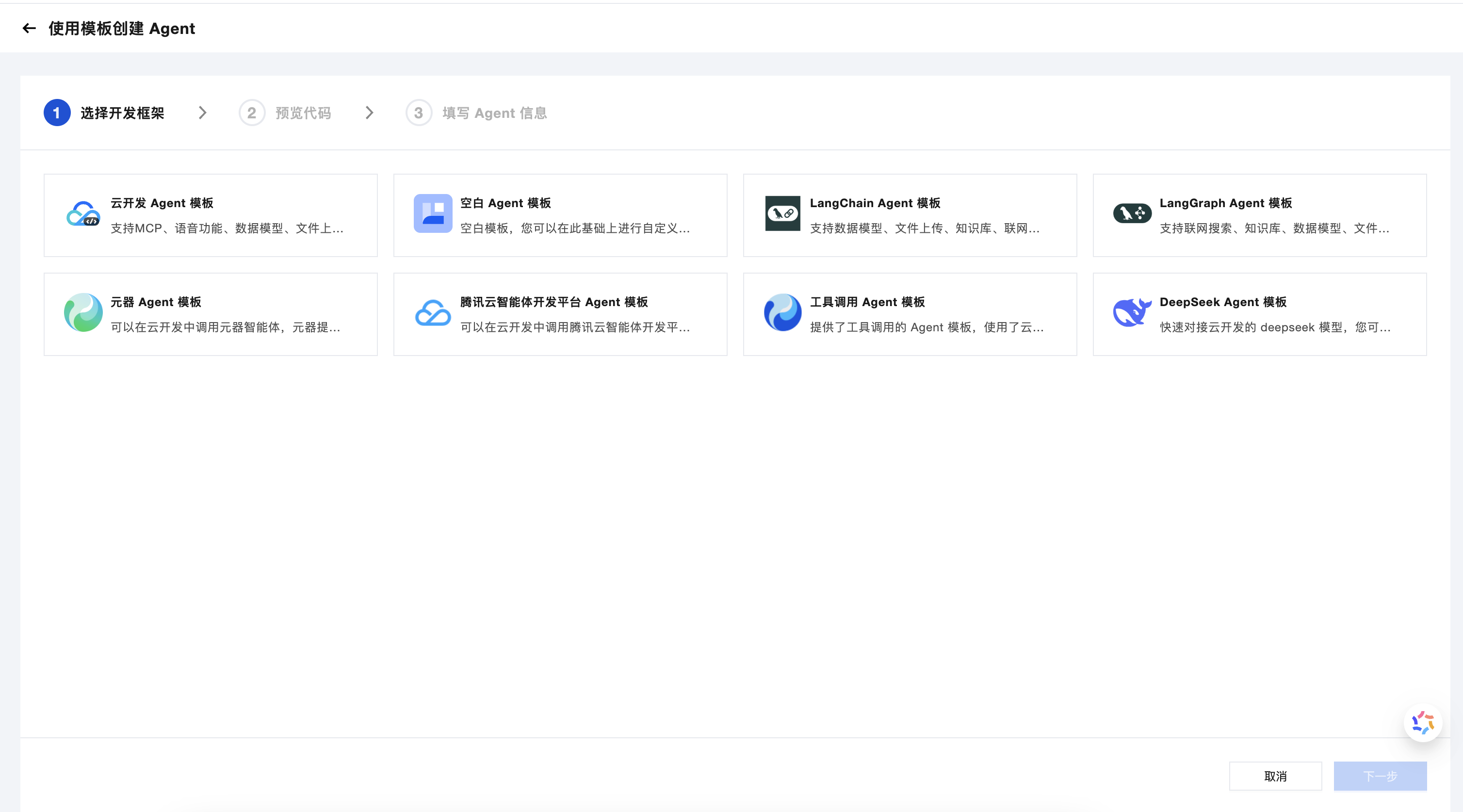

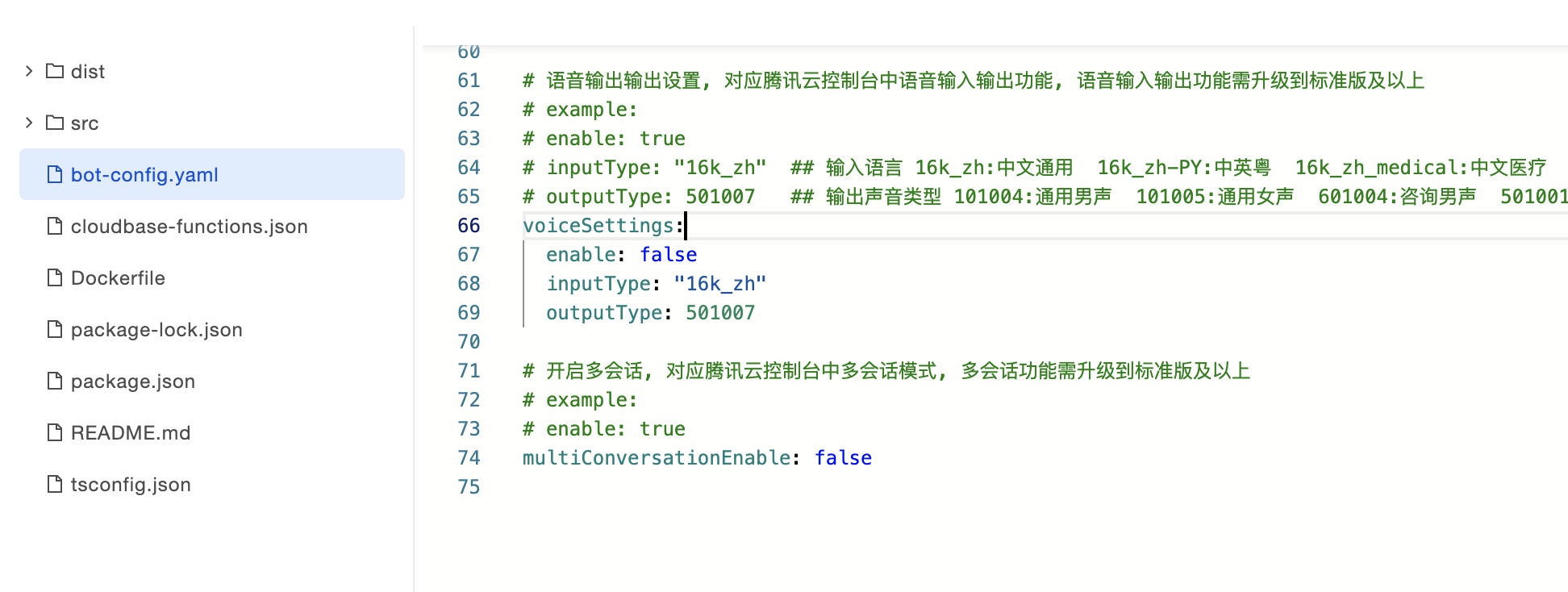

On the Cloud Development Platform, under AI+, select the "Cloud Development Agent template", then set voiceSettings: true in bot-config.yaml.

Cloud Development Standard Edition or higher is required to enable this feature.

Test

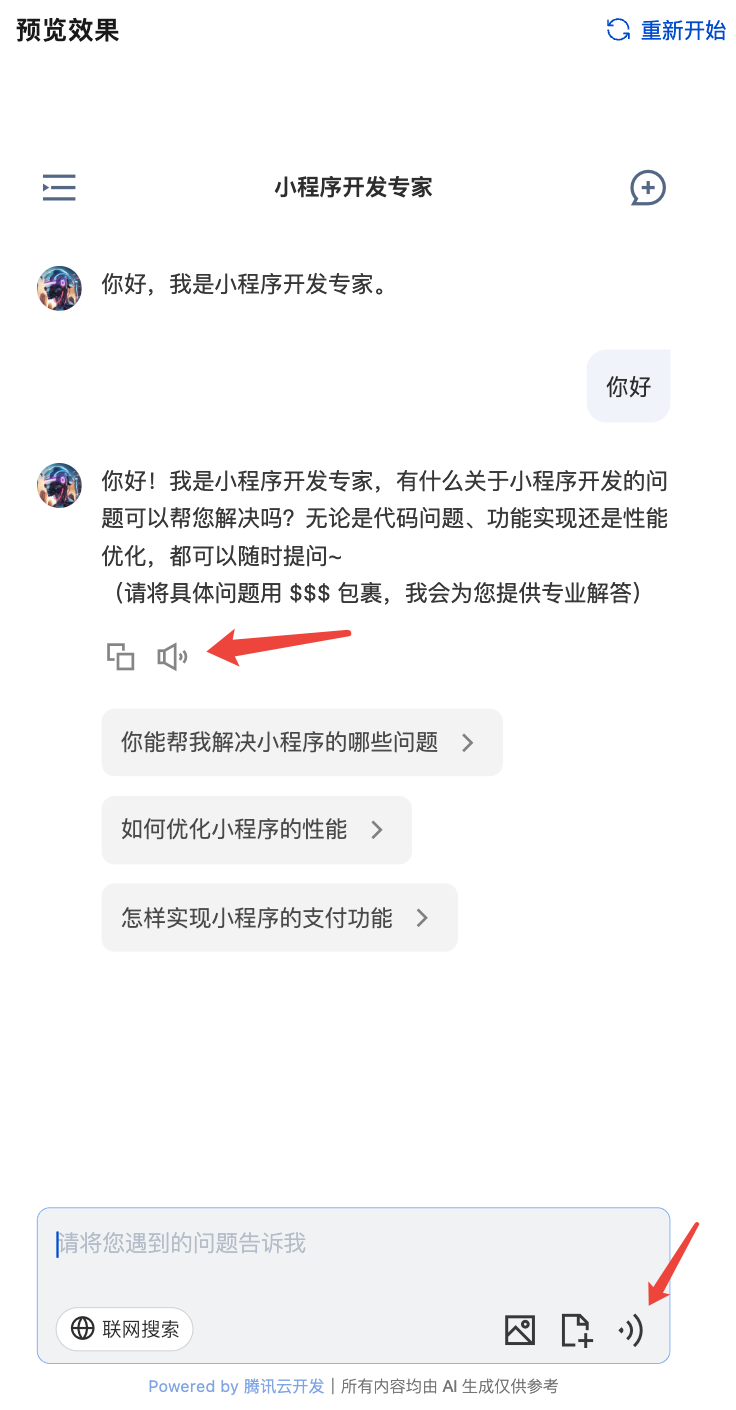

After configuration, you can experience it in real-time in the preview area on the right. The Speech Recognition & Text-to-Speech entry is as shown below:

2. Integration via Components/HTTP API/SDK

2.1 Component Integration

Low-code components have built-in voice capability. Refer to the documentation to integrate the component.

Mini Program source code components have built-in voice capability. Refer to the documentation to integrate the component.

React components have built-in voice capability. Refer to the documentation to integrate the component.

2.2 HTTP API Integration

Refer to the HTTP API documentation.

2.3 SDK Integration

Initialize the SDK:

    // In the root directory of the Web project, install the SDK with npm or yarn:
    // npm i @cloudbase/js-sdk

    // Import the SDK. Here we import the full cloudbase-js-sdk; importing by module is also supported.
    import cloudbase from "@cloudbase/js-sdk";

    const app = cloudbase.init({
      env: "your-env", // Replace with your actual environment ID
    });

    const auth = app.auth();
    await auth.signInAnonymously(); // Or use another login method.

    const ai = app.ai();
    // Now you can call the methods provided by the ai module.

Speech to Text:

    const res = await ai.bot.speechToText({
      botId: "botId-xxx",
      engSerViceType: "16k_zh",
      voiceFormat: "mp3",
      url: "https://example.com/audio.mp3",
    });
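The `engSerViceType` value selects the recognition scenario. The sketch below maps the scenario names listed under Limitations to engine codes. Only `"16k_zh"` appears in this document; the other codes are assumed to match the engine codes of Tencent Cloud's one-sentence recognition API and should be verified against the API reference. `buildSpeechToTextParams` is a hypothetical helper.

```javascript
// Scenario name -> engine code. Only "16k_zh" is confirmed by this document;
// the remaining codes are assumptions to verify against the API reference.
const ENGINE_BY_SCENARIO = new Map([
  ["Chinese General", "16k_zh"],
  ["Chinese-English-Cantonese", "16k_zh-PY"],
  ["Chinese Medical", "16k_zh_medical"],
  ["English", "16k_en"],
  ["Cantonese", "16k_yue"],
]);

// Hypothetical helper: builds the argument object for ai.bot.speechToText.
function buildSpeechToTextParams({ botId, scenario, voiceFormat, url }) {
  const engSerViceType = ENGINE_BY_SCENARIO.get(scenario);
  if (!engSerViceType) {
    throw new Error(`Unknown recognition scenario: ${scenario}`);
  }
  return { botId, engSerViceType, voiceFormat, url };
}

const params = buildSpeechToTextParams({
  botId: "botId-xxx",
  scenario: "Chinese General",
  voiceFormat: "mp3",
  url: "https://example.com/audio.mp3",
});
console.log(params.engSerViceType); // "16k_zh"
// With an initialized SDK: const res = await ai.bot.speechToText(params);
```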

Text to Speech (Launch Asynchronous Task):

    const res = await ai.bot.textToSpeech({
      botId: "botId-xxx",
      voiceType: 1,
      text: "Hello, I am an AI assistant",
    });

Query Text to Speech Task Result:

    const res = await ai.bot.getTextToSpeechResult({
      botId: "botId-xxx",
      taskId: "task-123", // obtained from the textToSpeech response
    });
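Because synthesis runs asynchronously, the result usually has to be queried more than once. The sketch below shows one possible polling loop. `pollUntil` and `makeFakeGetResult` are hypothetical helpers, and the `status` and `resultUrl` fields are invented for illustration; check the SDK documentation for the actual response shape. A fake query function stands in for `ai.bot.getTextToSpeechResult` so the example runs on its own.

```javascript
// Generic polling helper: calls fetchResult until isDone accepts the response,
// waiting intervalMs between attempts and giving up after maxAttempts.
async function pollUntil(fetchResult, isDone, { intervalMs = 2000, maxAttempts = 30 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await fetchResult();
    if (isDone(result)) return result;
    if (attempt < maxAttempts) {
      await new Promise((resolve) => setTimeout(resolve, intervalMs));
    }
  }
  throw new Error(`Task not finished after ${maxAttempts} attempts`);
}

// Stand-in for ai.bot.getTextToSpeechResult. The response fields
// `status` and `resultUrl` are hypothetical.
function makeFakeGetResult(readyAfterCalls) {
  let calls = 0;
  return async () => {
    calls += 1;
    return calls >= readyAfterCalls
      ? { status: "done", resultUrl: "https://example.com/speech.mp3" }
      : { status: "pending" };
  };
}

// The fake task reports "done" on the third query.
pollUntil(makeFakeGetResult(3), (r) => r.status === "done", { intervalMs: 10 })
  .then((r) => console.log(r.status, r.resultUrl));
```

In a real application, the first argument would be something like `() => ai.bot.getTextToSpeechResult({ botId, taskId })`, and the `isDone` predicate would test whichever field the actual response uses to signal completion.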

Refer to the SDK documentation for details on each method.