Import/Export

The "Document Database" provides comprehensive data import and export functionality for data migration, backup, and analysis. This document describes how to import and export data using the collection management features.

Data Import

The "Data Import" feature supports batch data import, helping you quickly migrate existing data or initialize test data.

Operation Steps

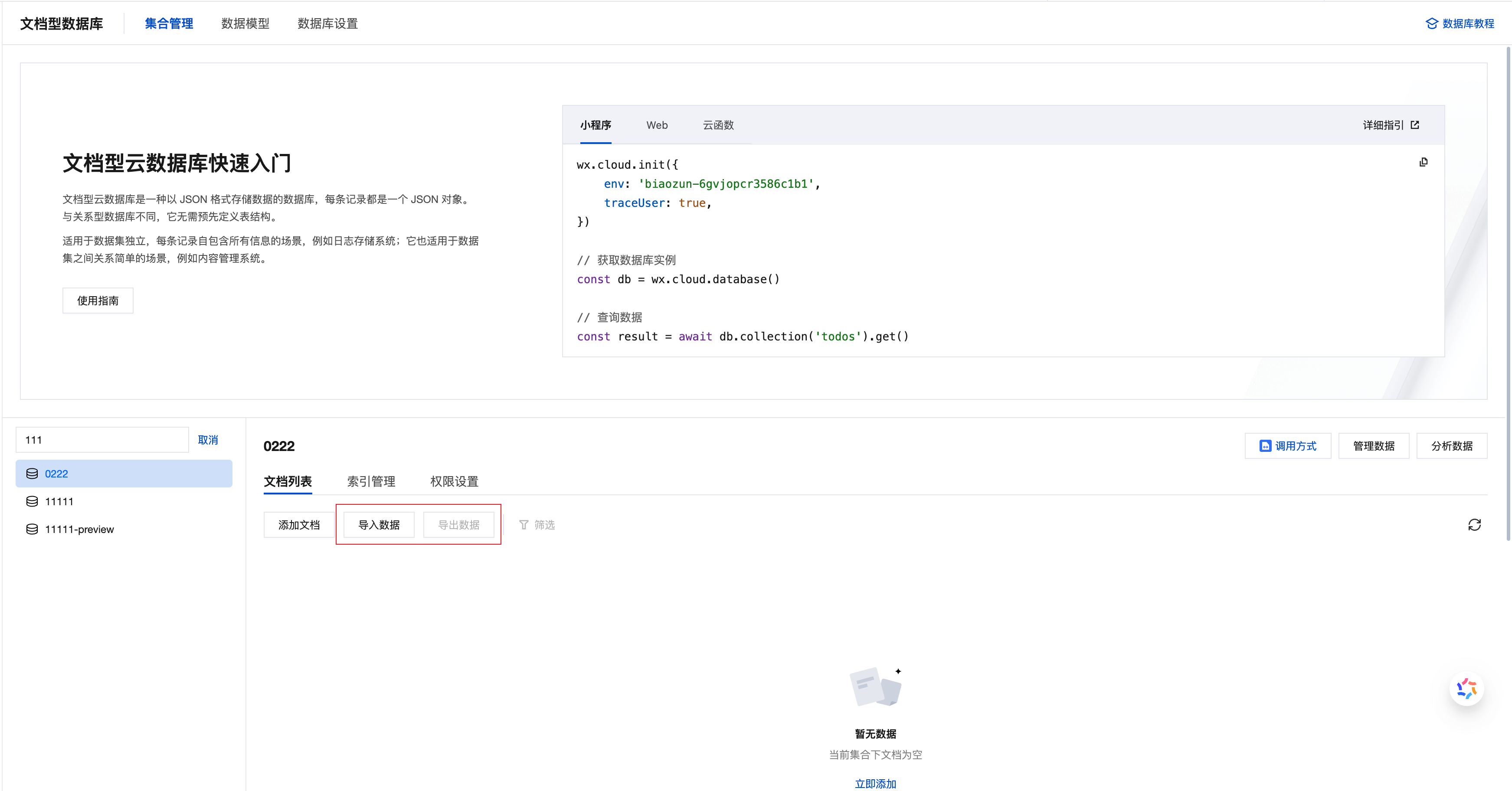

Enter Collection Management: Go to CloudBase Platform/Document Database/Collection Management and select the target collection

Start Import: Click the "Import" button

Select File: Upload the prepared data file (supports JSON and CSV formats)

Configure Import Parameters:

- Select file format (JSON or CSV)

- Select conflict resolution mode (Insert or Upsert)

Execute Import: After confirming the configuration, click "Import" to start the import

Conflict Resolution Modes

When importing data, select a conflict resolution mode. It determines how records whose _id already exists in the collection are handled:

| Mode | Behavior | Applicable Scenarios | Usage Notes |
|---|---|---|---|
| Insert | Always inserts new records | • Fresh data import • Data initialization | The import file must not contain any _id that already exists in the collection |
| Upsert | Updates the record if its _id exists, otherwise inserts it | • Data updates • Incremental import • Data synchronization | Record existence is determined by the _id field |

- First Import: Insert mode is recommended; it imports every record as new and never overwrites existing ones

- Data Updates: Upsert mode is recommended; it supports incremental updates without creating duplicate records

- Regular Synchronization: Use Upsert mode to keep the collection continuously in sync with the source data
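To make the difference between the two modes concrete, here is a minimal local simulation in Python. It models a collection as a dict keyed by _id; the function name import_records is hypothetical and this is not the CloudBase implementation. It assumes Upsert replaces the whole record (this page says "update" without stating whether fields are merged), and it gives records without an _id a uuid4 hex string as a stand-in for the ID the system generates.

```python
import uuid

def import_records(collection, records, mode):
    """Apply records to a dict-based collection; return (written, rejected)."""
    if mode not in ("insert", "upsert"):
        raise ValueError(f"unknown mode: {mode!r}")
    written, rejected = 0, 0
    for record in records:
        doc = dict(record)
        # No _id: generate one (uuid4 hex stands in for the platform's own ID format).
        doc.setdefault("_id", uuid.uuid4().hex)
        if mode == "insert" and doc["_id"] in collection:
            # Insert never overwrites, so a matching _id is rejected. This also
            # catches an _id repeated inside the same file.
            rejected += 1
            continue
        # Insert of a new _id, or Upsert: store the record under its _id.
        # Assumption: Upsert replaces the whole record rather than merging fields.
        collection[doc["_id"]] = doc
        written += 1
    return written, rejected

existing = {"user_001": {"_id": "user_001", "name": "Zhang San", "age": 25}}
batch = [
    {"_id": "user_001", "name": "Zhang San", "age": 26},
    {"_id": "user_002", "name": "Li Si", "age": 30},
]
print(import_records(dict(existing), batch, "insert"))  # (1, 1)
print(import_records(dict(existing), batch, "upsert"))  # (2, 0)
```

With the same input, Insert writes only user_002 and leaves user_001 untouched, while Upsert writes both and changes user_001's age to 26.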

Supported File Formats

JSON Format

Encoding Requirements: UTF-8 encoding

Format Description: Uses JSON Lines format, with one complete JSON object per line

Sample File:

{"_id": "user_001", "name": "Zhang San", "age": 25, "email": "zhang@example.com", "role": "admin"}

{"_id": "user_002", "name": "Li Si", "age": 30, "email": "li@example.com", "role": "editor"}

{"_id": "user_003", "name": "Wang Wu", "age": 28, "email": "wang@example.com", "role": "viewer"}

Format Features:

- Each line represents an independent data record

- Supports nested objects and array structures

- Fully preserves data type information
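A minimal sketch of producing a JSON Lines file with Python's standard json module. The helper name write_json_lines and the file name users.json are hypothetical. json.dumps without indent keeps each record on a single line, and ensure_ascii=False keeps non-ASCII text readable, which is why the file is opened as UTF-8.

```python
import json
import os
import tempfile

def write_json_lines(path, records):
    """Write records as JSON Lines: one complete JSON object per line."""
    # UTF-8 matches the encoding requirement; newline="\n" prevents CRLF
    # translation on Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")

records = [
    {"_id": "user_001", "name": "Zhang San", "age": 25, "tags": ["vip"]},
    {"_id": "user_002", "name": "Li Si", "age": 30, "profile": {"city": "Shanghai"}},
]
# Hypothetical output location; point this at your own file.
path = os.path.join(tempfile.mkdtemp(), "users.json")
write_json_lines(path, records)
```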

CSV Format

Encoding Requirements: UTF-8 encoding

Format Description: Standard CSV format; the first row contains the field names (header) and each subsequent row contains one data record

Sample File:

_id,name,age,email,role

user_001,Zhang San,25,zhang@example.com,admin

user_002,Li Si,30,li@example.com,editor

user_003,Wang Wu,28,wang@example.com,viewer

Format Features:

- Simple structure, easy to edit with tools like Excel

- Suitable for flat tabular data

- Field names and values are separated by commas
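When preparing a CSV file programmatically, Python's csv module writes the header row and handles quoting. A minimal sketch, assuming the importer follows standard CSV quoting rules (a value containing a comma is wrapped in double quotes); to_csv and the note field are hypothetical. csv.DictWriter uses \r\n line endings by default, as RFC 4180 specifies.

```python
import csv
import io

def to_csv(records, fieldnames):
    """Render flat records as CSV text: a header row, then one row per record."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    # Values containing commas, quotes, or line breaks are quoted automatically.
    writer.writerows(records)
    return buf.getvalue()

rows = [
    {"_id": "user_001", "name": "Zhang San", "age": 25, "note": "admin, owner"},
    {"_id": "user_002", "name": "Li Si", "age": 30, "note": "editor"},
]
text = to_csv(rows, ["_id", "name", "age", "note"])
# To save it, open the target file with encoding="utf-8" and newline="" and write text.
```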

Format Requirements and Limitations

JSON Format Specifications

| Specification | Requirement | Correct Example | Incorrect Example |
|---|---|---|---|
| Record Separation | Separate records with a newline character (\n) | One JSON object per line | Multiple objects on the same line |
| Field Naming | Must not start or end with . and must not contain consecutive dots (..) | name, user.id, data.info | .name, name., a..b |
| Unique Keys | No duplicate key names within the same object | {"id": 1, "name": "Zhang San"} | {"a": 1, "a": 2} |
| Time Format | Use the MongoDB ISODate format, written as an Extended JSON $date object | {"date": {"$date": "2024-01-15T10:30:00.882Z"}} | {"date": "2024-01-15"} |
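The rules in the table can be checked locally before uploading. A minimal sketch using only Python's standard library: object_pairs_hook catches duplicate keys (json.loads otherwise silently keeps the last value), a recursive walk checks field names, and to_extended_date builds the {"$date": ...} form from a timezone-aware datetime. All function names are hypothetical; applying the naming rule to nested objects and skipping blank lines are assumptions, since this page does not say.

```python
import json
from datetime import datetime, timezone

class DuplicateKeyError(ValueError):
    """Raised when one JSON object repeats a key."""

def _reject_duplicates(pairs):
    # Called with every (key, value) pair of each object, so repeats are visible here.
    obj = {}
    for key, value in pairs:
        if key in obj:
            raise DuplicateKeyError(f"duplicate key: {key!r}")
        obj[key] = value
    return obj

def _bad_field_names(value, found):
    # Assumption: the naming rule applies to nested objects too.
    if isinstance(value, dict):
        for key, child in value.items():
            if key.startswith(".") or key.endswith(".") or ".." in key:
                found.append(key)
            _bad_field_names(child, found)
    elif isinstance(value, list):
        for item in value:
            _bad_field_names(item, found)

def validate_json_lines(text):
    """Return a list of (line_number, message) problems."""
    errors = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # assumption: blank lines are simply skipped
        try:
            record = json.loads(line, object_pairs_hook=_reject_duplicates)
        except DuplicateKeyError as exc:
            errors.append((line_no, str(exc)))
            continue
        except json.JSONDecodeError as exc:
            # Two objects on one line fail here with "Extra data".
            errors.append((line_no, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            errors.append((line_no, "record is not a JSON object"))
            continue
        found = []
        _bad_field_names(record, found)
        errors.extend((line_no, f"invalid field name: {key!r}") for key in found)
    return errors

def to_extended_date(dt):
    """Encode an aware datetime as {"$date": "YYYY-MM-DDTHH:MM:SS.mmmZ"} in UTC."""
    if dt.tzinfo is None:
        raise ValueError("use a timezone-aware datetime")
    utc = dt.astimezone(timezone.utc)
    return {"$date": utc.strftime("%Y-%m-%dT%H:%M:%S") + f".{utc.microsecond // 1000:03d}Z"}

print(to_extended_date(datetime(2024, 1, 15, 10, 30, 0, 882000, tzinfo=timezone.utc)))
# {'$date': '2024-01-15T10:30:00.882Z'}
```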

Data Integrity Requirements

| Import Mode | _id Requirement | Details |
|---|---|---|
| Insert Mode | Must be unique | • No duplicate _id values within the import file • No _id in the import file may match an existing record in the collection |
| Upsert Mode | Duplicates allowed | • If an _id in the file matches an existing record, that record is updated • If the _id does not exist, a new record is inserted |
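Before an Insert-mode import, both _id conditions can be checked locally. A minimal sketch; duplicate_ids is a hypothetical helper, and existing_ids stands for the _id values already in the collection (for example, collected from a previous JSON export). Records without an _id are ignored because the system generates one for them.

```python
from collections import Counter

def duplicate_ids(records, existing_ids=()):
    """Return (ids repeated within the file, ids that already exist in the collection)."""
    counts = Counter(r["_id"] for r in records if "_id" in r)
    repeated_in_file = sorted(i for i, n in counts.items() if n > 1)
    already_in_db = sorted(set(counts) & set(existing_ids))
    return repeated_in_file, already_in_db

records = [{"_id": "user_001"}, {"_id": "user_002"}, {"_id": "user_001"}, {"name": "no id"}]
print(duplicate_ids(records, existing_ids={"user_002", "user_009"}))
# (['user_001'], ['user_002'])
```

Both lists must be empty for an Insert-mode import to go through cleanly; in Upsert mode only the first list is worth reviewing.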

⚠️ Important Notes:

- If the _id field is not specified, the system automatically generates a unique _id

- Back up your data before importing to avoid data loss from accidental operations

Import Result Description

After the import completes, the system displays detailed import statistics:

- Successfully Imported Records: Number of records successfully written to the database

- Failed Records: Number of records that failed to import

- Failure Reasons: Specific error messages (such as format errors or duplicate _id values)

- Skipped Records: Number of records skipped in Insert mode because of a duplicate _id

Data Export

The "Data Export" feature supports exporting collection data to files, facilitating data backup, analysis, or migration to other systems.

Operation Steps

Enter Collection Management: Go to CloudBase Platform/Document Database/Collection Management and select the collection to export

Start Export: Click the "Export" button

Configure Export Parameters:

- Select export format (JSON or CSV)

- Specify the fields to export (optional for JSON, required for CSV)

- Choose save location

Execute Export: After confirming the configuration, click "Export" to start the export

Download File: After the export completes, download the generated file to your local machine

Export Format Configuration

JSON Format

Applicable Scenarios:

- Complete data backup

- Cross-system data migration

- Preserve complete data structure

Format Features:

- Fully preserves data types and structure information

- Supports nested objects and arrays

- The exported file can be imported directly

Field Configuration:

- No Field Specification: Export all fields and data from the collection (recommended for backups)

- Specify Fields: Export only specified fields to reduce file size

Export Example:

{"_id": "user_001", "name": "Zhang San", "profile": {"age": 25, "city": "Beijing"}, "tags": ["vip", "active"]}

{"_id": "user_002", "name": "Li Si", "profile": {"age": 30, "city": "Shanghai"}, "tags": ["normal"]}
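Because each line of a JSON export is a complete object, the file can be read back line by line with types and nesting intact. A minimal Python sketch; the two lines below are the export example above, and read_json_lines is a hypothetical helper. With a downloaded file, pass the open file object instead, e.g. open(path, encoding="utf-8").

```python
import json

def read_json_lines(lines):
    """Parse JSON Lines (any iterable of strings, e.g. an open file) into dicts."""
    # Blank lines are skipped; json.loads tolerates the trailing newline of file lines.
    return [json.loads(line) for line in lines if line.strip()]

exported = [
    '{"_id": "user_001", "name": "Zhang San", "profile": {"age": 25, "city": "Beijing"}, "tags": ["vip", "active"]}',
    '{"_id": "user_002", "name": "Li Si", "profile": {"age": 30, "city": "Shanghai"}, "tags": ["normal"]}',
]
users = read_json_lines(exported)
# Nested objects and arrays come back as dicts and lists; numbers stay numbers.
vip_ids = [u["_id"] for u in users if "vip" in u["tags"]]
print(vip_ids)  # ['user_001']
print(users[1]["profile"]["age"] + 1)  # 31
```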

CSV Format

Applicable Scenarios:

- Data analysis and statistics

- Excel import processing

- Generate data reports

Format Features:

- Table structure, easy to read

- Compatible with office software such as Excel and Numbers

- Nested data is flattened into columns

Field Configuration:

- Must Specify Fields: CSV format requires explicitly specifying the list of fields to export

- Nested Field Access: Use dot notation to access fields of nested objects, such as profile.age and profile.city
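The dot-notation lookup behind a CSV export can be sketched as follows. This is an illustration, not the platform's exporter: get_path and export_csv are hypothetical names, a missing field becomes an empty cell, and arrays or objects are written as JSON text; how the console renders those cases is not documented. The sketch always treats . as a path separator, so a top-level key that itself contains a dot (allowed by the field-naming rule, e.g. user.id) would not be found.

```python
import csv
import io
import json

def get_path(record, path):
    """Resolve a dot-notation path such as "profile.age"; return "" if absent."""
    value = record
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            return ""
        value = value[part]
    # Assumption: non-scalar values are serialized as JSON text.
    if isinstance(value, (dict, list)):
        return json.dumps(value, ensure_ascii=False)
    return value

def export_csv(records, fields):
    """Build CSV text with one column per requested field path."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(fields)
    for record in records:
        writer.writerow([get_path(record, field) for field in fields])
    return buf.getvalue()

users = [
    {"_id": "user_001", "name": "Zhang San", "profile": {"age": 25, "city": "Beijing"}},
    {"_id": "user_002", "name": "Li Si", "profile": {"age": 30}},
]
print(export_csv(users, ["_id", "name", "profile.age", "profile.city"]))
```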

Field Configuration Example:

_id, name, age, email

_id, name, profile.age, profile.city, createdAt, updatedAt

Export Example:

_id,name,age,email

user_001,Zhang San,25,zhang@example.com

user_002,Li Si,30,li@example.com

Export Format Comparison

| Comparison | JSON Format | CSV Format |
|---|---|---|
| Field Specification | Optional (exports all by default) | Must specify |
| Data Integrity | Fully preserves all types and structures | Flattened, loses nested structure |
| File Size | Relatively larger | Relatively smaller |
| Readability | Suitable for technical personnel | Suitable for business personnel |
| Re-import | Can be directly imported | Requires format conversion |
| Applicable Tools | Code editors, database tools | Excel, Numbers, data analysis tools |

Use Cases and Best Practices

| Use Case | Recommended Format | Configuration Recommendations | Description |
|---|---|---|---|
| Complete Data Backup | JSON | No field specification, export all data | Preserves complete data structure for easy recovery |
| Cross-system Migration | JSON | No field specification | Ensures data migration integrity |
| Data Analysis | CSV | Specify fields needed for analysis | Facilitates processing with tools like Excel |
| Generate Reports | CSV | Specify fields required for reports | Quickly generate business reports |
| Large Data Export | JSON or CSV | Export in batches, 10,000-50,000 records per batch | Avoids exporting too much data at once |
| Nested Data Export | JSON | No field specification | CSV cannot fully preserve nested structures |

- Regular Backups: Export the full collection in JSON format weekly as a backup

- Large Datasets: When a collection exceeds 100,000 records, export it in batches

- Nested Data: If records contain complex nested structures, prefer JSON format

- Business Analysis: When analyzing data in Excel, use CSV format and specify the key fields
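Planning batches within the recommended 10,000-50,000 range is simple arithmetic; the sketch below splits a record count into (skip, limit) ranges. plan_batches is a hypothetical helper, and this page does not say how a range is applied in the console. If you page with skip/limit through an SDK query, sort on a stable field such as _id so that consecutive batches do not overlap or miss records.

```python
def plan_batches(total, batch_size=50_000):
    """Split `total` records into (skip, limit) ranges of at most batch_size."""
    if total < 0 or batch_size <= 0:
        raise ValueError("total must be >= 0 and batch_size > 0")
    # Each range starts where the previous one ended; the last one may be shorter.
    return [(skip, min(batch_size, total - skip)) for skip in range(0, total, batch_size)]

print(plan_batches(120_000))  # [(0, 50000), (50000, 50000), (100000, 20000)]
```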