Large Model Configuration Guide

This guide explains how to connect and configure large models on the CloudBase (Cloud Development) platform.

CloudBase has pre-integrated Hunyuan and DeepSeek into the platform.

Integrating Large Models

A custom model can be connected only if its API is compatible with the OpenAI protocol.

Required Parameters

To connect a custom model, prepare the following three parameters.

- BaseURL: Request URL for the large model API (interfaces compatible with the OpenAI protocol)

- APIKey: Key for accessing the large model API

- Model Name: The specific model identifier, such as hunyuan-turbos-latest or deepseek-chat
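As a rough illustration of how these three parameters fit together, the sketch below builds (but does not send) a chat completion request in the shape the OpenAI protocol uses: a POST to `<BaseURL>/chat/completions`, with the API Key in a bearer `Authorization` header and the Model Name in the JSON body. The helper name `build_chat_request` and the placeholder key are assumptions made for illustration and are not part of CloudBase.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    # OpenAI-compatible services expose chat completions under <BaseURL>/chat/completions.
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model,  # the Model Name parameter
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the APIKey parameter
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration; substitute your own API Key.
request = build_chat_request(
    base_url="https://api.deepseek.com/v1",
    api_key="sk-your-api-key",
    model="deepseek-chat",
    prompt="Hello",
)
print(request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send the request. That step is left out here so the sketch runs without a valid key.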

Integration Steps

- Obtain BaseURL and API Key

The table below lists example values for Hunyuan and DeepSeek. For other large models, refer to the model vendor's documentation to obtain the BaseURL and API Key.

| Model | Model Provider | BaseURL Example | Model Name | Billing |
|---|---|---|---|---|
| Hunyuan | Tencent Hunyuan Large Model | https://api.hunyuan.cloud.tencent.com/v1 | Refer to Hunyuan Model List | Refer to Billing Documentation |
| DeepSeek | Tencent Cloud Intelligent Agent Platform/DeepSeek | https://api.lkeap.cloud.tencent.com/v1 | Refer to DeepSeek Model List | Refer to Billing Documentation. DeepSeek supports postpaid billing only. |
| DeepSeek | DeepSeek Official | https://api.deepseek.com/v1 | Refer to DeepSeek Model List | Refer to DeepSeek Documentation |

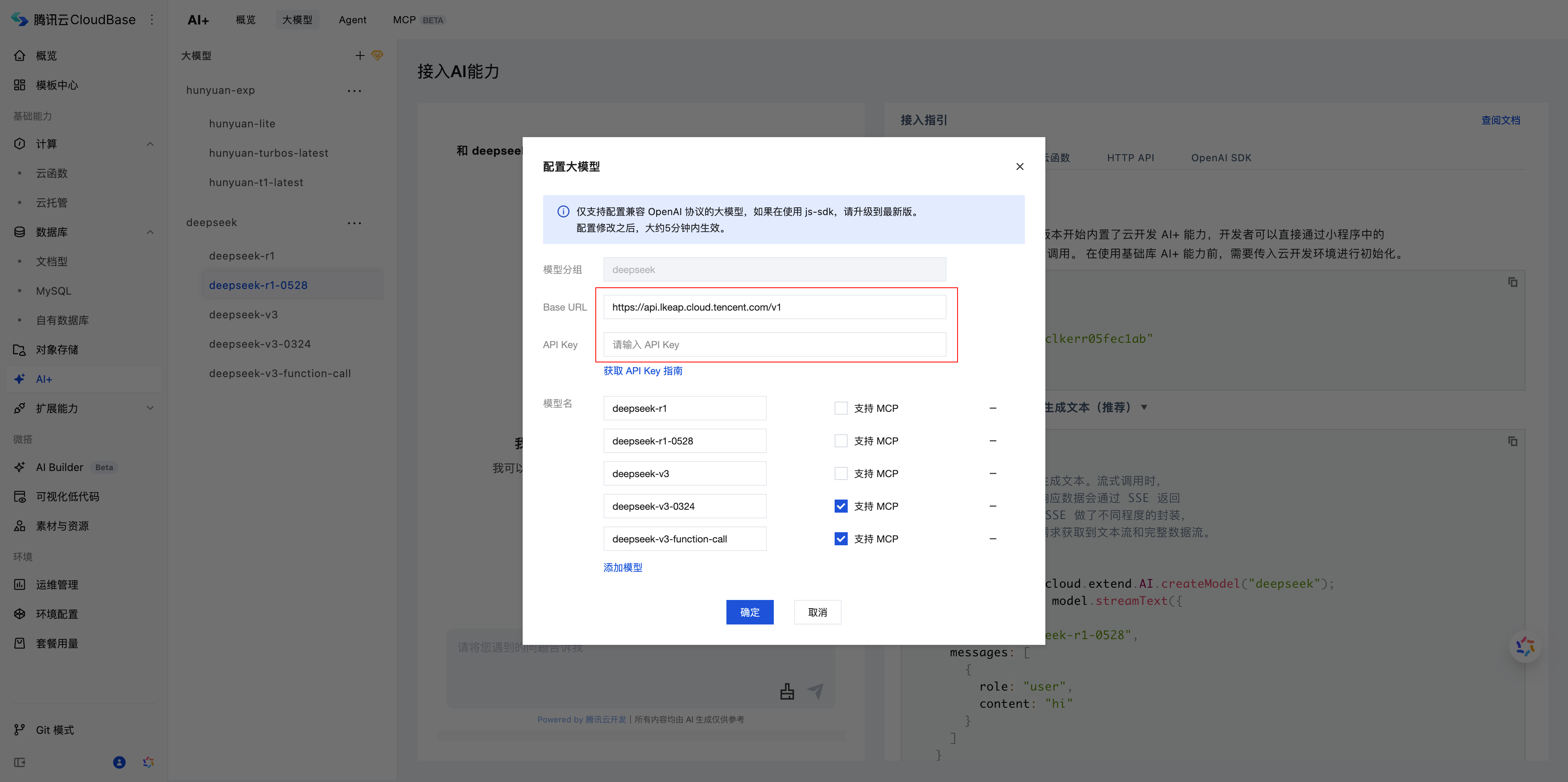

Configure in the platform

- Go to the Cloud Development/ai+ console

- Click "Add" or "Modify Configuration"

- Fill in the BaseURL and API Key

The Cloud Development platform has preconfigured the model names for Tencent Hunyuan Large Model and Tencent Cloud Intelligent Agent Platform/DeepSeek.

If you need to access other large models, please fill in the corresponding model name in the Model Name field.

Access Example

Official DeepSeek

Obtain BaseURL and Model Name

- Open the DeepSeek API Documentation

- Confirm the BaseURL: https://api.deepseek.com/v1

- View the available Model Names: deepseek-chat (corresponds to DeepSeek-V3-0324) and deepseek-reasoner (corresponds to DeepSeek-R1-0528)

Obtain API Key

- Log in to DeepSeek Console

- Create and obtain an API Key on the API keys page

Configuration in Cloud Development Platform

Go to the CloudBase/ai+ console

Click the New Large Model button

Fill in the following information:

- Group name (custom)

- BaseURL

- API Key

- Model Name

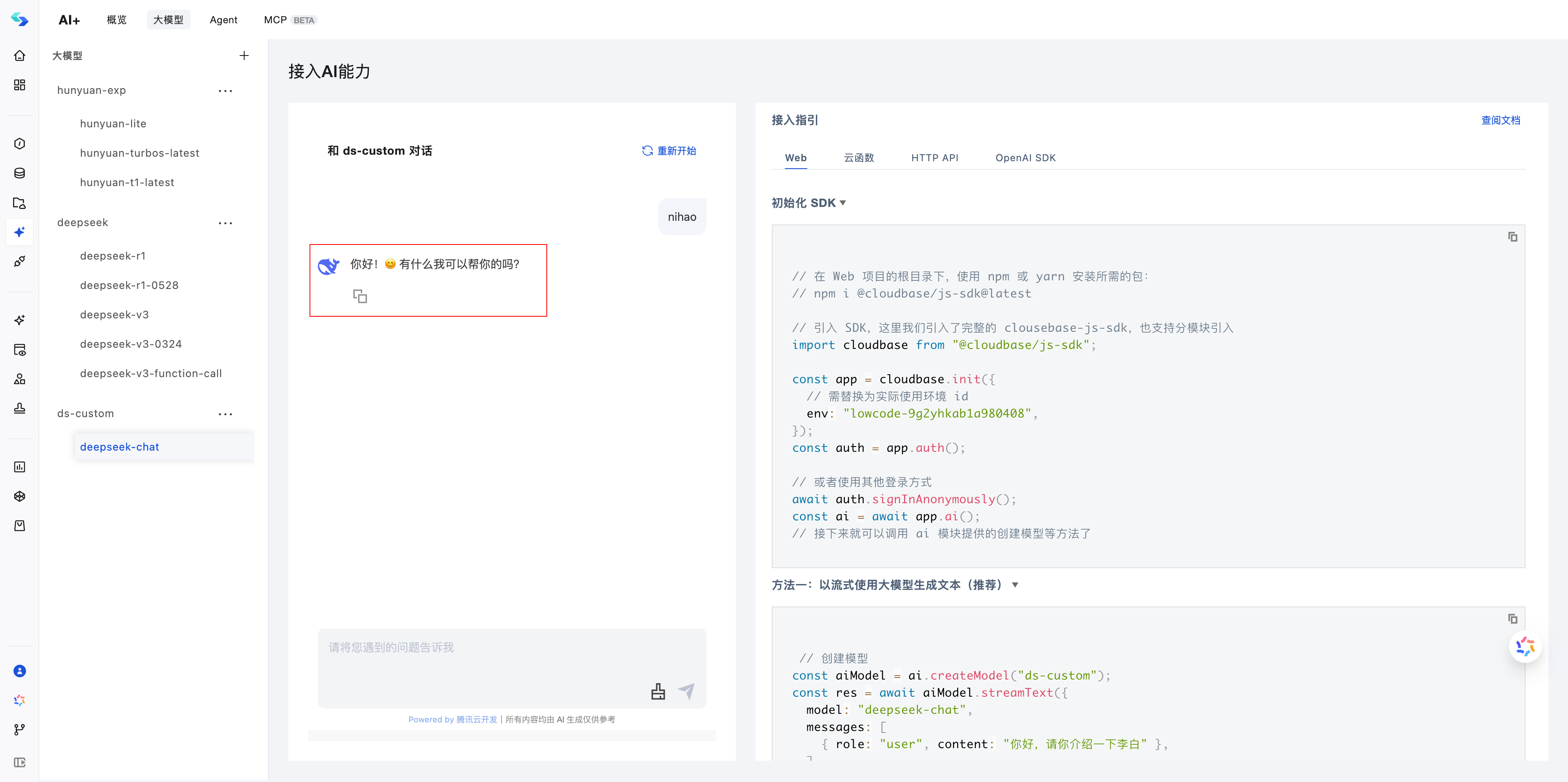

- Wait for the configuration to take effect

- The system requires a few minutes to complete the configuration

- After successful configuration, you will see the following effect:

Frequently Asked Questions

1: How do I determine whether my large model is compatible with the OpenAI protocol?

A: Large models compatible with the OpenAI protocol typically explicitly state this in their API documentation, or their API structure aligns with OpenAI's interface specifications (e.g., using similar endpoints and parameter structures).
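One informal way to compare "parameter structures" is to look at the JSON a provider returns for a chat request. The sketch below checks whether a decoded response has the `choices[0].message` layout used by OpenAI-style chat completions. The function name and the sample payload are hand-written for illustration; passing this check is a hint, not proof, of full compatibility.

```python
def looks_like_openai_chat_response(payload: dict) -> bool:
    """Return True if payload has the basic shape of an OpenAI-style chat completion."""
    choices = payload.get("choices")
    if not isinstance(choices, list) or not choices:
        return False
    first = choices[0]
    if not isinstance(first, dict):
        return False
    message = first.get("message")
    # An OpenAI-style reply carries the generated text in choices[0].message.
    return isinstance(message, dict) and "role" in message and "content" in message


# Illustrative payload written by hand, not captured from a real service.
sample = {
    "id": "example-id",
    "object": "chat.completion",
    "model": "deepseek-chat",
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "Hi"}, "finish_reason": "stop"}
    ],
}
print(looks_like_openai_chat_response(sample))
```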

2: What should I do if the large model fails to work properly after configuration?

A: Please check the following points:

- Is the API Key correct and not expired?

- Is the BaseURL correct?

- Is the model name consistent with the provider's documentation?

- Is the network connection normal?
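Some of the checks above can be run locally before saving the configuration. The following minimal sketch flags a malformed BaseURL, an empty API Key, and stray whitespace in the key or model name. `check_model_config` is a hypothetical helper, not a CloudBase API, and it cannot tell whether a key has expired or whether the network is reachable.

```python
from urllib.parse import urlparse


def check_model_config(base_url: str, api_key: str, model_name: str) -> list:
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []

    parsed = urlparse(base_url.strip())
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        problems.append("BaseURL is not a valid http(s) URL")

    # Keys copied from a web console often pick up spaces or line breaks.
    if not api_key.strip() or any(ch.isspace() for ch in api_key):
        problems.append("API Key is empty or contains whitespace")

    if not model_name.strip() or any(ch.isspace() for ch in model_name):
        problems.append("Model Name is empty or contains whitespace")

    return problems


# Placeholder values for illustration.
print(check_model_config("https://api.deepseek.com/v1", "sk-your-api-key", "deepseek-chat"))
print(check_model_config("api.deepseek.com/v1", " ", "deepseek chat"))
```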

3: How much concurrency does the large model support?

A: When you use the tokens provided by the platform, each environment supports 5 concurrent requests.

To go beyond this, you can add a custom model, modify the DeepSeek/Hunyuan configuration, or enter a third-party API Key.

Once any of these is configured, the platform no longer imposes a concurrency limit. The limits set by the third-party model provider apply instead.
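If the applicable limit is a fixed number of concurrent requests, one client-side option is to cap the number of requests in flight. The sketch below does this with a thread pool. `call_model` is a hypothetical stand-in that only sleeps, and the value 5 simply mirrors the platform figure above; use whatever limit your provider documents.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_CONCURRENCY = 5  # assumed limit; replace with your provider's documented value

_lock = threading.Lock()
_in_flight = 0
peak_in_flight = 0


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model request; records peak concurrency."""
    global _in_flight, peak_in_flight
    with _lock:
        _in_flight += 1
        peak_in_flight = max(peak_in_flight, _in_flight)
    time.sleep(0.05)  # simulate network latency
    with _lock:
        _in_flight -= 1
    return f"reply to {prompt}"


prompts = [f"question {i}" for i in range(20)]

# The pool never runs more than MAX_CONCURRENCY calls at once; the rest wait in a queue.
with ThreadPoolExecutor(max_workers=MAX_CONCURRENCY) as pool:
    replies = list(pool.map(call_model, prompts))

print(len(replies), "replies; peak concurrency:", peak_in_flight)
```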